On May 12th 2022, the UK Parliament published its Draft Online Safety Bill, moving one step closer to this regulation’s implementation. The Bill aims to ensure that a framework for online regulation is created, allowing for the upholding of freedom of expression online while also ensuring that people are protected from harmful content.

In its current form, the Online Safety Bill seeks to ensure that online platforms maintain a duty of care towards their users and take action against illegal content online, such as terrorist content, child sexual abuse material (CSAM), and priority categories of illegal content (likely to include offences such as the sale of illegal items or services, revenge pornography, and hate crimes), as well as against ‘legal but harmful’ content (such as cyberbullying).

Who does the Online Safety Bill apply to?

The OSB primarily applies to online services that have links with the UK. To fall into scope, they must do one of the following:

- Allow online user interactions;

- Allow user-generated content on their platforms;

- Provide search services (also known as search engines).

A service is seen to have “links with the UK” if it does one of the following:

- It has a significant number of UK users; OR

- UK users form one of or the only target markets for the service; OR

- The service can be used in the UK and there is potential harm to UK users presented by the content.
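The two-part scope test above (a regulated service type AND a link with the UK) can be sketched as a simple rule check. This is only an illustrative sketch: the `Service` class and all of its field names are hypothetical, and whether a real service meets any single criterion (for example, what counts as a "significant number" of UK users) is a legal judgment the code does not attempt to make.

```python
from dataclasses import dataclass


@dataclass
class Service:
    # Hypothetical flags; each one stands for a criterion listed in the text.
    allows_user_interaction: bool = False
    allows_user_generated_content: bool = False
    provides_search: bool = False
    significant_uk_users: bool = False
    uk_is_target_market: bool = False
    usable_in_uk_with_potential_harm: bool = False


def is_regulated_type(s: Service) -> bool:
    """True if the service does at least one of the three in-scope activities."""
    return (
        s.allows_user_interaction
        or s.allows_user_generated_content
        or s.provides_search
    )


def has_uk_links(s: Service) -> bool:
    """True if at least one of the three 'links with the UK' criteria holds."""
    return (
        s.significant_uk_users
        or s.uk_is_target_market
        or s.usable_in_uk_with_potential_harm
    )


def in_scope(s: Service) -> bool:
    """A service is in scope only if both parts of the test are met."""
    return is_regulated_type(s) and has_uk_links(s)


forum = Service(allows_user_generated_content=True, uk_is_target_market=True)
brochure_site = Service(significant_uk_users=True)  # no interaction, UGC or search

print(in_scope(forum))
print(in_scope(brochure_site))
```

In this sketch the forum is in scope because it satisfies one criterion from each list, while the brochure site is not: UK users alone do not bring a service into scope if it offers none of the regulated functionalities.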

What obligations does the Online Safety Bill set out?

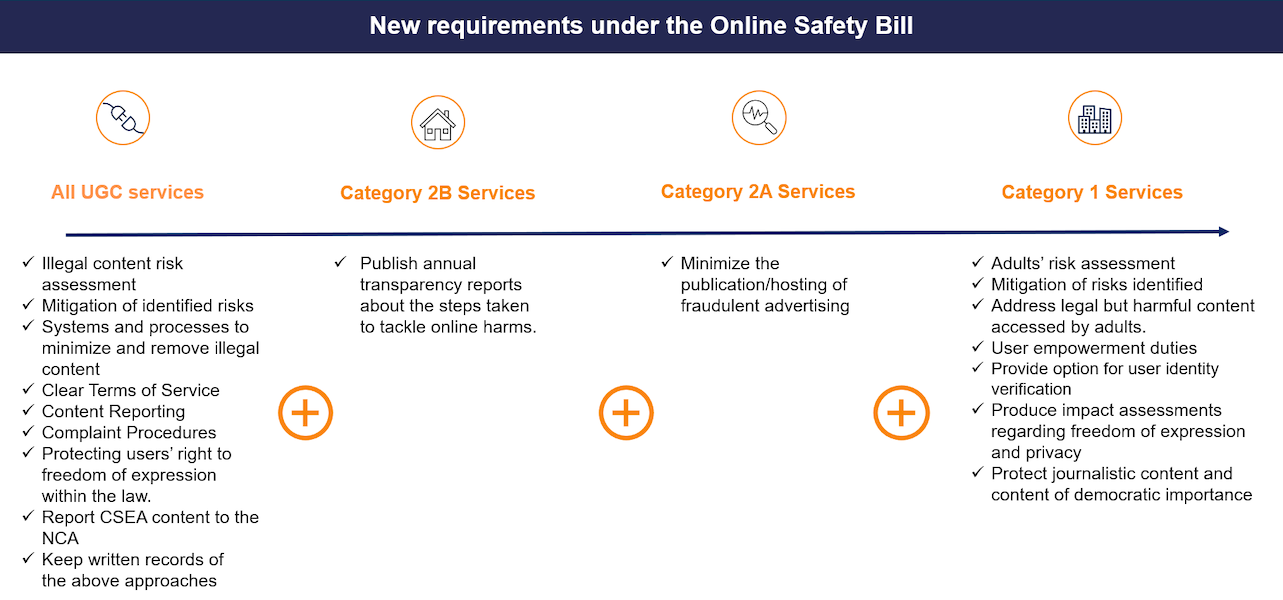

The Online Safety Bill has set out proportional requirements for the companies within its scope. The Office of Communications (OFCOM) acts as the UK’s telecommunications regulatory body and will decide the categorization of companies into the following three groups (listed in order from the fewest to the greatest number of obligations):

Category 2B – User-to-user services that do not meet the threshold conditions of Category 1 (see below).

Category 2A – Regulated search services

Category 1 – The largest user-to-user services

The specific thresholds for the Categories will be set out in secondary legislation and will depend on the size of the platform (in users), its functionalities, and the resulting potential risk of harm it holds.

How does the Online Safety Bill protect minors?

The Online Safety Bill places a heavy focus on greater safety for children online by requiring all sites to adopt a ‘duty of care’ towards children. Platforms that are likely to be used by children will also have to protect young people from legal but harmful content, such as eating-disorder or self-harm content.

Online services will be required to allow for easy reporting of harmful content, and act on such reports quickly. Furthermore, services will be required to report any Child Sexual Exploitation and Abuse to the National Crime Agency.

Who will be enforcing the Online Safety Bill?

Under the OSB, OFCOM will be tasked with supervising providers of online services and enforcing the regulation. OFCOM will have the power to open investigations, carry out inspections, penalize infringements, impose fines or periodic penalty payments, and request the temporary restriction of a service in case of a continued or serious offense. Furthermore, OFCOM will be required to categorize services, publish codes of practice, establish an appeal and super-complaint function, and establish mechanisms for user advocacy.

Failure to comply with obligations can result in fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is higher. Furthermore, criminal proceedings can be brought against named senior managers of offending companies that fail to comply with information notices from OFCOM.
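The "whichever is higher" rule can be worked through with a short calculation. The function name and the example revenue figures below are illustrative assumptions; how "qualifying worldwide revenue" is measured is a matter for the legislation and OFCOM, not for this sketch.

```python
FIXED_CAP_GBP = 18_000_000  # the £18 million figure quoted above


def max_fine_gbp(qualifying_worldwide_revenue_gbp: float) -> float:
    """Upper limit of the fine: the higher of £18m and 10% of revenue."""
    ten_percent_of_revenue = qualifying_worldwide_revenue_gbp / 10
    return max(FIXED_CAP_GBP, ten_percent_of_revenue)


# Hypothetical revenue figures, chosen only to show both branches of the rule.
for revenue in (50_000_000, 180_000_000, 500_000_000):
    print(f"revenue £{revenue:,} -> maximum fine £{max_fine_gbp(revenue):,.0f}")
```

The two limits meet at £180 million of qualifying worldwide revenue. Below that figure the £18 million limit is the higher of the two; above it, the 10% limit applies.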

What does the Online Safety Bill mean for your business?

The OSB imposes a number of new obligations for online service providers and introduces hefty fines to ensure compliance. To avoid these, providers of online services must implement a number of operational changes.

Most immediately, providers need to ensure they have well-designed and easy-to-use notice-and-action and complaint-handling mechanisms in place. They also need to assess the levels of risk on their platform and keep records of their assessments. Equally important are systems and processes that protect the free expression of journalistic and democratic content. Strong processes, supported by technology, are key for this.

Additionally, search services and Category 1 platforms will be subject to specific rules to minimize the publication or hosting of fraudulent advertising. Finally, the protection of minors is central to the regulation, and providers will have to implement child protection measures such as age verification and risk assessments on their platforms.

Concretely, providers of online services will have to adopt a streamlined set of processes that allow for continuous compliance, notably with obligations such as transparency reporting.

Next steps

It is important to note that the current version of the Online Safety Bill is a draft and is subject to change. On the 7th of September 2022, Prime Minister Liz Truss confirmed that the government would continue with the Bill, with “some tweaks”. One item in the Bill under heavy scrutiny is its focus on ‘legal but harmful’ content, with opponents stating that it goes against free speech laws. In the meantime, it is essential for organisations that may be impacted to start assessing their platforms in order to ensure compliance when the Online Safety Bill does eventually come into force.

How can Tremau help you?

Tremau’s solution provides a single trust & safety content moderation platform that prioritizes compliance as a service and integrates workflow automation and other AI tools. The platform ensures that providers of online services can respect all OSB requirements while improving their key trust & safety performance metrics, protecting their brands, increasing handling capacity, and reducing their administrative and reporting burden.

To find out more, contact us at info@tremau.com.

Tremau Policy Research Team